Every day, we're reminded that there's something unsettling going on with men and boys.

In American politics, polling shows them breaking hard for Trump. In British politics, there's a substantial gap between men's and women's support for Reform, especially among younger voters. On television, we watch dramas about young boys driven mad by the online ecosystem. Online, we read about a manosphere of misogynistic entrepreneurs, trying to turn male alienation into money. In school, we see boys perform worse than girls across pretty much every metric, with the sole exception of maths. After school, we find that boys are less likely to go into higher education than girls. In society, we discover that they're three times more likely than women to commit suicide. It goes on and on.

There's a small army of men who have capitalised on this phenomenon. On the more respectable end, there's Jordan B Peterson. I say respectable - he is, when you pay any degree of serious attention to what he's saying, almost completely insane - but in this context he is on the more urbane end of the spectrum. On the other end, we have figures like Andrew Tate, who unfortunately need no introduction. In the middle are the male influencer set, charging impossible amounts of money for insecure men to attend crash-courses on how to attract women.

As one of the 'alpha male boot camp' organisers told attendees: In the past, "low-status men got at least one girl that they could have sex with. Then, after birth control and the sexual revolution, we allowed people to choose more, and what women were choosing was the high-status men. Which is why you guys are here."

As you can tell by that quote, what's sold as a kind of men's self-help project is in fact an ideological programme grounded in hazy eugenics, Social Darwinism, latent anti-60s backlash and free market economics.

Under this worldview, human romantic and sexual attraction is interpreted as supply and demand, with people basically reduced to the status of goods traded on a network. If you see a prettier woman than the one you're dating, you trade in your old model and go for the updated one, the same way you would a phone. Women are expected to do the same. Everyone is a consumer - of human lives, as well as gadgets.

The basis on which the human consumers choose whether to rent or purchase each other is perceived social status. In men's case, this is denoted through strength, wealth, and confidence. The task for low status men is to either become, or to appear like, high status men. This makes up the vast majority of conversation on this topic online - a perpetual frenzied assessment of status. Incels supposedly have no sex and pick-up artists supposedly have lots of it, but they are entirely united by their basic world view.

This is a grim world. Humans are resources. High status humans are a scarce resource. You must become the strongest, richest male, the dominant chimpanzee, to consume the best female resources. It is also a zero-sum world. It insists that to get what you want you must out-compete and exploit others. Everyone is out for themselves in the jungle. It has no social conscience. It is as self-concerned as it is self-involved.

Obviously, this is appalling. But more importantly, it is false. It is a lie. And because it is a lie, it will not actually get men what they want. It will not make them more desirable, in fact quite the opposite. It'll turn them into resentful, pathological crybaby freaks.

And where is the counter-narrative? The progressive left seemingly has no opinion on men getting laid. It has opinions on everything else. It has opinions on women getting laid (good for them), it has opinions on toxic masculinity (bad) and it has opinions on gender equality (there should be more of it). But it is completely silent on the subject of men getting laid - which, generally speaking, they are very interested in doing, and will continue to be interested in regardless of whether progressives want to talk about it. So the stage has been left entirely clear for the far-right to set the narrative, which it has done with devastating effect, monopolising young men's obsession with sex to spread a vicious fictitious storyline about power and identity.

What would a progressive narrative look like? First, it would not be motivated by fear and hatred of women. Second, it would consider masculinity healthy and valuable rather than being ashamed of it. Third, it would be comfortable talking about the fact that men want to get laid and offer advice for how they might do that if it's something they're struggling with. And finally, it would provide a true assessment of romance and attraction - one in which they were not societally zero-sum, but win-win.

That assessment would be grounded in the following truth: That the things which men can do to make themselves more attractive are things which actually improve society.

Things are really intense when you're young. Sexual rejection is basically an existential experience. This is what makes young boys particularly vulnerable to bullshit artists online and it's why we're willing to erect whole ideological structures on the basis of someone just not being that into us.

It never occurs to you that someone simply didn't fancy you. Instead, their lack of interest is a rejection of your entire self. Not only is nothing about you valuable or attractive, nothing ever will be. Your character, your physique, your smell, your interests, your disposition, your life chances, your eyebrows - every one of these things has been refused in a take-it-or-leave-it bundle.

Early rejection therefore stings in a way that it will never sting again. It can plunge teenagers into a pit of self-hatred and savage, knife-wielding introspection. We watch the one we pined over go off with someone else and we feel a desperate sense of need and despair, a challenge not to our good fortune but to our whole sense of identity.

This doesn't necessarily get much better in our 20s. At this age, we often experience fierce sexual jealousy. The idea of your partner cheating on you can feel physically unbearable, because it is interpreted as a fundamental judgement on your adequacy as a man: physically, emotionally and socially, in terms of status.

This is the paranoia and insecurity that lie behind the whole dictionary of female cheating, from Chaucer's 'cuckold' to Twitter's 'cuck'. It establishes a rigidly hierarchical structure of male status and then insists that any unfortunate incident - she doesn't fancy you, she cheated on you - can only be explained by your low ranking within it.

None of this is true. It's all bullshit, invented by insecure men to make other men feel as petrified as they are.

The most important thing I ever read about dating was from a male writer who, upon being rejected one time, simply said: 'It's OK. I'm not for everyone.' This should be a mantra. It should, ideally, be accompanied with a shrug. Deeply internalising this sentiment will be the most liberating thing you can do.

This is the real reason why someone doesn't fancy you. It's not because you are unfanciable. It's not because you are fundamentally useless as a human being. It's not because you're minging. It's because you're not for everyone and as it happens you're not for this one. In the future, there will be women you are for: beautiful, funny, mischievous women. You just weren't for this one. And this one, incidentally, will have guys she's not for either.

People do not really cheat because they have found someone of a higher status and are unable to resist them. They cheat because they need the excitement of a fling to counteract the monotony of normal life, or their partner has started taking them for granted, or it had been too long since someone told them they were beautiful, or they're addicted to risk-taking, or their relationship is in a state of disrepair, or they need fresh sexual attention to alleviate their insecurity, or they just got pissed and fucked up.

As you get older, you begin to realise the boring, reassuring truth of all this. You don't have some great moment of revelation. You basically just increase the data set. With a data set of one, the teenager decides that a single rejection is a universal rejection for all time. With a much larger data set, the 30-year-old knows that other people will fancy them even if this one in particular doesn't. It sucks. But it sucks much less.

Something else happens as you get older. Your idea of status becomes more complex and nuanced. In school, there are very clear frameworks for establishing success and high premiums on securing them. There is a particular emphasis on sport. There are only a set number of players for the team. There is one man of the match. There are winners and there are losers.

None of this suited me. I am very bad at nearly every activity which is coded as male. I am shit at every sport. I mean genuinely every single one. I have no sense of direction. I use my phone's maps function to navigate city streets I have lived in for a quarter of a century. My sense of spatial awareness is basically non-existent. I cannot drive. I failed my driving test five times. Indeed, I can't really be in charge of any vehicle, because I'll crash it. I actually hate all talk of vehicles - of car types, or brands, or horsepower, or - worst of all - routes. The entire subcategory of British male conversation based on routes - 'we got caught in a traffic jam on the M3', 'we took the Northern line then the overground to Kensal Rise' - drives me to despair. 'Who the fuck gives a fuck how you got here,' I think silently, 'you're here now and you're boring me to fucking tears.' I do not want to man the barbecue. I can't even comprehend what is going on in the minds of men when it comes to barbecues. You stare at them thinking: you're in insurance, you haven't become a hunter gatherer because you turned a sausage with a pair of tongs. I can't do DIY. I cannot open beer bottles by slamming them on the side of a table. My natural gestures are all camp. I could change them if I wanted to, but I don't want to. There's really almost no part of the traditional male world in which I am able to perform competently or even at all.

In school, this sort of thing is kind of a problem. The reason for that is that people keep making you do things you're evidently not good at and then evaluate your status on the basis of that performance. But after school, it stops. This is the thing, the crucial thing you must keep in mind throughout those years: eventually, it stops. If you don't like playing football you don't have to play football. If you don't like interpreting poems, you don't have to do that either. Your lifestyle starts to complement your preferences, rather than rubbing up awkwardly against them. And the longer you spend in the world doing things you like and are good at, the more confident your demeanour becomes, the more natural you begin to feel in your skin.

Even if you struggle with all the standard definitions of masculinity - strength, confidence, sporting prowess - do not worry. There are other kinds of masculinity available to you that are just as attractive to women.

The most dangerous and self-harming thing you can do as a man is to adopt the persona of the man you think you should be. That's what those bootcamps are really selling - an impersonation of masculinity, as understood through a rudimentary Social Darwinist frame. It's like thinking you can be an engineer because you bought a boiler suit and a spanner.

The key to real masculinity lies in the following point: It accomplishes social good. That sounds conscientious. It is. Proudly so. But it is also selfishly true. And these two things can coexist quite easily: your social conscience and your personal advantage.

The single most important masculine trait you can have is competence. Obviously, it isn't only men that have this trait and it isn't only women that find it attractive. But competence is much more often celebrated in masculinity than it is femininity. And, despite the fact that it is hardly ever mentioned in this type of discussion, it is probably the most desirable quality you can possess.

This comes in many forms. But in every single one of them, it can be learned.

There is the low-level daily type of competence: sorting the transport from the airport on holiday, dealing with the admin, booking where to eat, handling the insurance claim, making sure that damp problem in your hallway doesn't run out of control, clocking the bicycle that's going too fast and might hit someone you're with - taking care of the interminable daily chaff of life. This stuff is unimaginably boring, but it makes the people you're with feel protected.

Then there is the high-level professional type of competence: being good at whatever it is you have decided you want to do with your life, working hard to perfect the skills you possess, showing the discipline and work-ethic to accomplish it.

We all intuitively know this is attractive. This is partly the pleasure of action-films like John Wick - it's not just the presentation of the action, it is the joy of watching someone be terribly good at perpetrating it. It is the joy found in Aaron Sorkin dramas, or in watching professional sports, or in reality shows like Bake Off. It is simply very pleasant to watch someone do something very well.

Men get themselves into a terrible muddle when they become obsessed with securing female attention. It becomes a self-refuting prophecy. The more fixated they become, the more desperate they appear, and women can smell sexual desperation from the other side of the room. The best possible advice you can give to someone who is trying and failing to get this attention is to stop trying. If you run towards it, it will take a step away from you. If you turn your back on it, you will find it there in front of you.

Instead, dedicate yourself to your professional life or your personal interests. Whatever it is, paid or unpaid - a source of skill and accomplishment that you will seek to maximise through diligence and hard work. This will, in the end, make you much more attractive. You will present like what you have become: an impressive and talented person who cares about what they do, does it well, is in control of their life and can protect the people around them.

It will also, and this is not a bad corollary, do more good for the world. You will be operating in the most socially valuable way possible. You will have maximised your contribution.

You will make the world better. And by doing so you will improve yourself.

You can see the same dynamic in the way you treat women. The key lesson here is very simple, although many men will never learn it: Don't objectify women, because it'll mess you up more than it messes them up.

I'm using this word in the old-school 1970s feminist way: turning a woman into an object. We don't deploy this phrase much anymore, I suppose because it became such a reflexive way of mocking feminism that people felt embarrassed to use it. In fact, it's a very helpful word.

How do the pick-up artists speak? As if a woman is a kind of safe, which requires the right code to access it. First you disparage her a bit, then make her laugh, then take your attention away: all this horrible manipulative gibberish. All of it completely useless. Even if you could sustain it, women would soon spot that there is something terribly wrong with you, as would most of the men you might want as friends. You will become a shell of a man, a character going through motions, while your inner life cascades towards complete personality failure.

There is a technique to talking to women which is far more effective. It is called: treat them like a fucking human being. Just actually talk to them. If you must, imagine that they are a man and then talk to them the way you would in that scenario. You will find that your status, if this is the key variable we're worrying about, has massively increased.

If you have a female friend on a dating site, ask her to do you a favour. Ask to see her inbox. It will be a highly revealing experience. There will be a lot of 'hey u ok?' There will be many obsequious introductions followed by suddenly aggressive responses if the woman doesn't reply. There will, of course, be unsolicited dick pics - less an appeal for approval than an attempted violation.

In short, there will be lots of messages from men who cannot really bring themselves to believe that these women have an internal life. They spent too much time watching the servile women of porn videos, or reading the crude IKEA-construction-guides of sexual attraction written by pick-up artists, or they simply view the whole enterprise like a maths puzzle where a certain number of introductions, no matter how redundant and lazy, will be able to trigger some sort of successful outcome.

Now consider how little warmth, humour and human authenticity it would take to stand out among these men. Shit, even the humour is often unnecessary. Simply being nice is enough. In fact, the importance of niceness is criminally underrated, both as a feature of humans in general and as a quality that attracts women. Nice guys do not finish last, except perhaps in a few cut-throat industries. Generally speaking, they are the ones people want to work with, stay friends with, and spend time with. And people includes women.

Treating women like they are actual human beings will make you more attractive. It will also give you a richer, deeper life. We talk about the friendzone as if it was the worst thing that could happen to someone. Sometimes people will go further and tell you that men and women simply can never be friends. They will actively encourage you to impoverish your life in the same way they have theirs. In fact, life without friendships with the opposite sex is a barren, pointlessly stunted thing. There is probably no worse emotional punishment you can inflict on yourself.

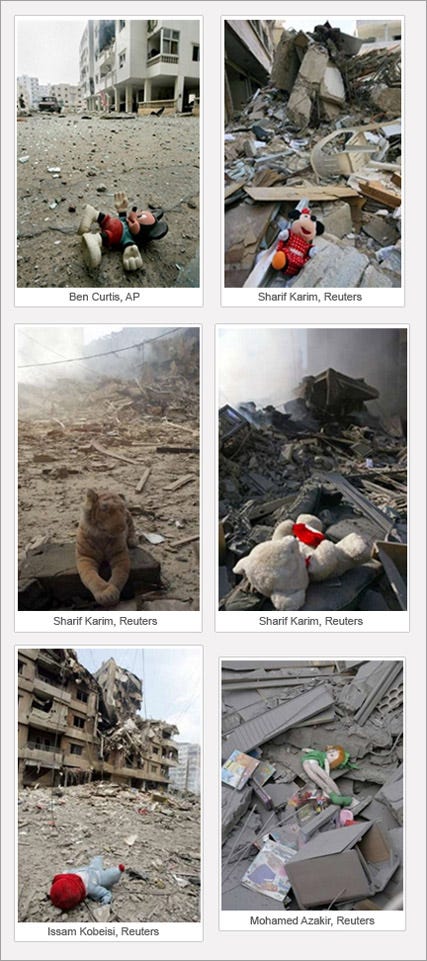

Society is also improved by this approach. Men's treatment of women like objects isn't just about sexualisation - it's about making them into opaque things, objects of haunting indecipherable mystery which we cannot understand or therefore empathise with. That is where so many of our current problems come from - the chasm of incomprehension and the snarling vicious myths about status and power which are cultivated within it.

This is one of the great privileges of being a man. The manosphere presents us with a zero-sum world in which the advantage of the individual comes at the cost of society. It places all of us under the yoke of a rigid social hierarchy.

There are some circumstances in which the zero-sum analysis is correct. But generally we live in a world of mutual benefit, secured through decency and authenticity. The things you do which can make you more attractive are also the things which improve the society you live in.

You can have the life you want. And by pursuing it, you can make the world a better place. That seems a better story than the one of terror, insecurity and jealousy that we see play out online.

Odds and sods

You can hear this week's column as a podcast on Substack by clicking here.

Couple of pieces in the i paper this week. The first was on Starmer's plan to recognise Palestine and the second was on the attempt to bring influencers into government comms strategy. I'll be on BBC Radio 5 from 11am until noon today talking about the week in politics.

I've become obsessed with the new Skunk Anansie album, The Painful Truth. The 90s nostalgia of this summer is framed around Oasis, which is kind of disappointing given they're not releasing any new material and indeed haven't written a decent album since 1996. Other bands of the era are still churning out astonishingly good stuff - Blur, Pulp and Skunk Anansie in particular. The Painful Truth is more mature than their early records, but if they released it in 1998 it would be considered a classic now. They are the same as they have ever been: angry, literate, expansive, eloquent, unafraid.

Skin is iconic. She is one of the last great rock stars. But there is something else which I want to briefly mention about her.

She recorded a video recently of an unsettling thing that happened to her while in a hotel. The thing that struck me was the quality of her voice when speaking. It has melody. It has these vast peaks and troughs, these rat-a-tat moments of speed followed by long stretches of leisurely pace.

I don't want to take this comparison too far, but the last time I felt this way was with Nina Simone. Her speech had that same sing-song quality, these incredible variations of pitch and rhythm. There is a good example of it here, in what might be my favourite interview of all time.

There are certain people who seem to vibrate musically, even when they are simply going about their daily life, who seem in contact with some kind of transcendent musicality even when chatting on a sofa or resting in bed. There aren't many contexts in which you can make valid comparisons with Simone. This is one of them.

Anyway, enough of that. See you next week.